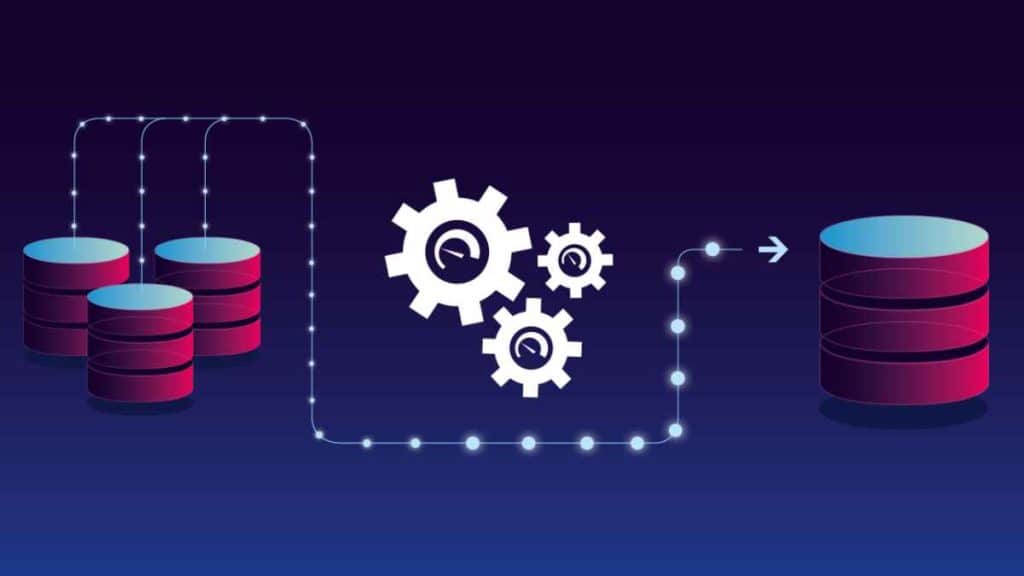

An ETL pipeline is a set of processes that extract data from one or more sources, transform it and load it into a target system such as a data warehouse. ETL is an acronym for ‘Extract, Transform, Load’, three interdependent data integration processes. Once data passes through this process, it is ready for reporting, analysis and deriving insights.

An ETL pipeline automates the gathering of data from a variety of sources and its transformation and consolidation into a single, high-performance store. Building an enterprise ETL pipeline is difficult, so solutions like Intellicus can simplify and automate the process.

The Basics of ETL Pipelines:

1. Extract:

The extraction phase involves gathering data from various sources. This step requires understanding the data sources and selecting the appropriate extraction methods to collect the relevant datasets.

2. Transform:

During the transformation phase, the extracted data is cleansed, validated and manipulated to meet specific requirements. This stage plays a vital role in ensuring data quality, consistency and compatibility.

3. Load:

The transformed data is loaded into a target system or data warehouse where it can be easily accessed for analysis, reporting and visualization. This stage often involves mapping the transformed data to a predefined schema or data model, which provides structure and organization for efficient storage and retrieval.
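
The three stages above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the sample CSV data, the `orders` table and the function names are all assumptions made for the example, and an in-memory SQLite database stands in for the data warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical source data; a real pipeline would read files, APIs or databases.
RAW_CSV = """order_id,customer,amount
1, Alice ,120.50
2,bob,
3,Carol,75
"""

def extract(text):
    """Extract: read raw rows from a CSV source."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: cleanse and validate rows, dropping those without an amount."""
    cleaned = []
    for row in rows:
        amount = row["amount"].strip()
        if not amount:
            continue  # validation: skip incomplete records
        cleaned.append(
            (int(row["order_id"]), row["customer"].strip().title(), float(amount))
        )
    return cleaned

def load(rows, conn):
    """Load: map rows onto a predefined schema in the target store."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()

def run_pipeline(conn):
    """Chain the three stages in order."""
    load(transform(extract(RAW_CSV)), conn)

conn = sqlite3.connect(":memory:")  # stand-in for a data warehouse
run_pipeline(conn)
print(conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
# [(1, 'Alice', 120.5), (3, 'Carol', 75.0)]
```

Keeping each stage in its own function means a source or a cleansing rule can be swapped without touching the other two stages.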

Benefits of using an ETL pipeline

Storage expansion –

Because data is filtered and consolidated before it is loaded, ETL reduces the amount of information kept in the data warehouse. Businesses can lower their storage costs and redirect those resources to other areas.

Well-developed Tools –

Since ETL has been around for a long time, a wide range of mature platforms is available to businesses for data integration. This makes ETL pipelines easier to build and maintain.

Data Consistency –

ETL pipelines provide a standardized approach to transforming and consolidating data from disparate sources. This ensures consistency and eliminates data discrepancies, enabling reliable analysis and decision-making.

Business Agility –

By providing a streamlined data integration process, ETL pipelines enable organizations to quickly adapt to changing business requirements. New data sources can be easily incorporated into the pipeline, allowing businesses to leverage emerging technologies and stay ahead of the competition.

Automation –

ETL pipelines automate the entire data transformation process, reducing manual effort and increasing efficiency. Once set up, the pipeline can be scheduled to run at specific intervals, ensuring timely data updates.

Types of ETL pipelines

ETL pipelines are primarily differentiated by latency into batch processing and real-time processing. Incremental and cloud pipelines are two further variants, distinguished by how much data is processed on each run and by where the pipeline runs.

1. Batch processing pipeline –

Batch processing is used in traditional analytics and business intelligence, where data passes through the ETL process at regular intervals with minimal human intervention. Large volumes of data are collected, transformed and loaded in batches, typically daily or weekly, which is more efficient than handling each record individually.
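
As a rough sketch of the batching idea, the Python snippet below groups records into fixed-size chunks and performs one write per chunk. The helper names, the batch size and the doubling "transformation" are placeholders chosen for illustration; a real job would be triggered by a scheduler and would write to a warehouse rather than a list.

```python
from itertools import islice

def batches(records, batch_size):
    """Yield successive lists of at most batch_size records."""
    iterator = iter(records)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch

def run_batch_job(records, batch_size=3):
    """Transform and 'load' records one batch at a time."""
    target = []  # stand-in for a warehouse table
    for batch in batches(records, batch_size):
        transformed = [value * 2 for value in batch]  # placeholder transformation
        target.append(transformed)  # one bulk write per batch
    return target

print(run_batch_job(range(1, 8)))
# [[2, 4, 6], [8, 10, 12], [14]]
```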

2. Real-time ETL Pipeline –

This process ingests structured or unstructured data from various sources, including IoT devices, data silos, social media feeds and sensors, in near real time. A real-time processing engine transforms the data, which supports real-time analytics, targeted marketing campaigns, predictive maintenance and similar use cases. Data is extracted from source systems, transformed and loaded into a target repository as one continuous process.
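
The continuous, event-at-a-time flow can be illustrated with a Python generator. Everything here is hypothetical: `event_stream` stands in for a message queue or sensor feed, the `temp_c` field and the 75-degree threshold are invented for the example, and a plain list plays the role of the target repository.

```python
import json

def event_stream():
    """Hypothetical source: stands in for a message queue or sensor feed."""
    yield '{"sensor": "s1", "temp_c": 21.5}'
    yield "not valid json"
    yield '{"sensor": "s2", "temp_c": 80.0}'

def transform(raw):
    """Parse one event and add a derived field; return None if malformed."""
    try:
        event = json.loads(raw)
    except json.JSONDecodeError:
        return None
    event["overheated"] = event["temp_c"] > 75.0  # assumed threshold
    return event

def run_streaming(source, sink):
    """Handle each event as soon as it arrives instead of waiting for a batch."""
    for raw in source:
        event = transform(raw)
        if event is not None:
            sink.append(event)  # stand-in for a write to the target repository

sink = []
run_streaming(event_stream(), sink)
print(sink)
```

Unlike the batch case, each record is transformed and loaded on its own, so the latency between an event occurring and becoming available for analysis stays low.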

3. Incremental ETL Pipeline –

This is a partial loading method that processes only a subset of rows, so it uses fewer resources. Only the source data that has been added or updated since the previous run is loaded into the target system. This type of pipeline is especially beneficial when the datasets are large and change frequently.
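
One common way to find the changed rows is a "high-water mark": remember the latest modification timestamp already loaded and fetch only rows newer than it. The sketch below assumes a hypothetical `customers` table with an `updated_at` column and uses two in-memory SQLite databases as source and target; other change-detection methods exist and may suit a given system better.

```python
import sqlite3

def incremental_load(source, target, last_watermark):
    """Copy only rows changed after last_watermark; return the new watermark."""
    rows = source.execute(
        "SELECT id, name, updated_at FROM customers "
        "WHERE updated_at > ? ORDER BY updated_at",
        (last_watermark,),
    ).fetchall()
    target.executemany(
        "INSERT OR REPLACE INTO customers (id, name, updated_at) VALUES (?, ?, ?)",
        rows,
    )
    target.commit()
    return rows[-1][2] if rows else last_watermark

source = sqlite3.connect(":memory:")
target = sqlite3.connect(":memory:")
for db in (source, target):
    db.execute(
        "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, updated_at TEXT)"
    )
source.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [(1, "Alice", "2024-01-01"), (2, "Bob", "2024-01-02")],
)

# First run: an empty watermark means every row counts as new.
watermark = incremental_load(source, target, "")

# A later change in the source: only this row is processed on the next run.
source.execute(
    "UPDATE customers SET name = 'Robert', updated_at = '2024-01-03' WHERE id = 2"
)
watermark = incremental_load(source, target, watermark)

print(watermark)
print(target.execute("SELECT * FROM customers ORDER BY id").fetchall())
# 2024-01-03
# [(1, 'Alice', '2024-01-01'), (2, 'Robert', '2024-01-03')]
```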

4. Cloud ETL pipeline –

In a cloud ETL pipeline, extraction, transformation and loading from the source system to the target system are performed with cloud-based tools and services and run entirely in the cloud. This is useful when you want to process data stored on cloud platforms and take advantage of the scale and flexibility of the cloud environment.

Data pipeline vs. ETL pipeline

The terms ‘ETL pipeline’ and ‘data pipeline’ are often used interchangeably, but they are not the same thing. ‘Data pipeline’ is an umbrella term for any process that moves data across systems, whereas an ETL pipeline is a particular type of data pipeline.

A data pipeline is a generalized set of processes for moving data from one system to another. The data may be transferred in real time or in bulk, and a data pipeline can also be used to move data between similar systems. An ETL pipeline is a specialized form of data pipeline that transfers data from multiple sources, converts it into the required format and loads it into a target database.

A data pipeline moves data across data stores, but it does not necessarily transform that data. An ETL pipeline, by definition, transforms data into a more useful and structured format before loading it into the destination system.
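
The distinction can be made concrete with two small Python functions. Both are illustrative assumptions rather than standard APIs: the first simply moves rows unchanged, while the second reshapes each row before loading it.

```python
def copy_pipeline(source_rows, target):
    """Generic data pipeline: moves rows as-is, with no transformation step."""
    target.extend(source_rows)

def etl_pipeline(source_rows, target):
    """ETL pipeline: cleans and types each row before loading it."""
    for row in source_rows:
        target.append({"name": row["name"].strip().title(), "age": int(row["age"])})

rows = [{"name": "  alice ", "age": "30"}]  # hypothetical raw input

copied, loaded = [], []
copy_pipeline(rows, copied)
etl_pipeline(rows, loaded)

print(copied)  # [{'name': '  alice ', 'age': '30'}]
print(loaded)  # [{'name': 'Alice', 'age': 30}]
```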

Conclusion

ETL pipelines play a vital role in unlocking the true potential of data by ensuring its integrity, quality and accessibility. With the ability to extract, transform and load data from diverse sources, organizations can derive valuable insights and make data-driven decisions. As businesses continue to face data challenges, implementing robust ETL pipelines becomes essential for harnessing the power of information.